Debian Bullseye, hard drives not found at boot

2020-03-08

Why is a hard drive suddenly missing?

I have a Debian workstation computer that is very reliable and that I have been using for many years. I use Debian testing in a rolling release fashion. I am currently using Debian Bullseye/11 while it is in its testing phase.

After some recent updates in early 2020, when I rebooted the computer sometimes a random hard drive was not found. Often I could simply reboot and the problem would be solved. Other times I would reboot, and a different hard drive would now be missing!

Here is what the failures looked like in my logs when the computer failed to load a backup drive called “drive1”:

    systemd[1]: dev-mapper-drive1_lvm\x2dbackup.device: Job dev-mapper-drive1_lvm\x2dbackup.device/start timed out.
    systemd[1]: Timed out waiting for device /dev/mapper/drive1_lvm-backup.
    systemd[1]: Dependency failed for /mnt/backup.
    systemd[1]: Dependency failed for Local File Systems.
    systemd[1]: local-fs.target: Job local-fs.target/start failed with result 'dependency'.
    systemd[1]: local-fs.target: Triggering OnFailure= dependencies.
    systemd[1]: mnt-backup.mount: Job mnt-backup.mount/start failed with result 'dependency'.
    systemd[1]: Dependency failed for File System Check on /dev/mapper/drive1_lvm-backup.
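The `\x2d` in those unit names is systemd escaping a literal `-`, because an unescaped `-` in a device unit name stands for a `/` in the path. The `systemd-escape` tool has an `--unescape` mode for decoding such names. As an illustration, here is a minimal Python sketch that pulls the timed-out device units out of a log like the one above (for example, the output of `journalctl -b`) and decodes them. The function names are hypothetical, and the decoding only handles the simple cases seen here, not every corner of systemd's escaping rules.

```python
import re

# Matches lines like:
#   systemd[1]: <unit>.device: Job <unit>.device/start timed out.
TIMEOUT_RE = re.compile(r"(\S+\.device): Job \S+ timed out")


def timed_out_device_units(log_text: str) -> list[str]:
    """Return the .device units whose start job timed out, in log order."""
    return TIMEOUT_RE.findall(log_text)


def device_unit_to_path(unit: str) -> str:
    """Simplified decoding of a systemd device unit name into a /dev path.

    An unescaped '-' separates path components and '\\xNN' encodes a
    literal byte, so 'dev-mapper-a\\x2db.device' becomes '/dev/mapper/a-b'.
    """
    name = unit.removesuffix(".device")
    path = name.replace("-", "/")  # plain '-' means '/'
    # Decode \xNN escapes (e.g. \x2d -> '-') after the '-' -> '/' step.
    path = re.sub(r"\\x([0-9a-fA-F]{2})",
                  lambda m: chr(int(m.group(1), 16)), path)
    return "/" + path


if __name__ == "__main__":
    log = r"""systemd[1]: dev-mapper-drive1_lvm\x2dbackup.device: Job dev-mapper-drive1_lvm\x2dbackup.device/start timed out.
systemd[1]: Timed out waiting for device /dev/mapper/drive1_lvm-backup."""
    for unit in timed_out_device_units(log):
        print(unit, "->", device_unit_to_path(unit))
```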

Short Answer

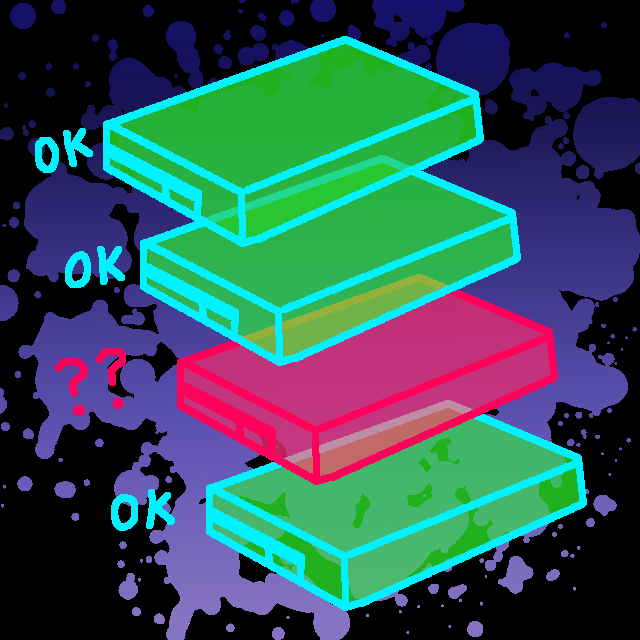

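The short answer is that it was a combination of two things: I had configured the hard drives using their old Linux names, like /dev/sda1, and recent upgrades to the computer meant those names changed between boots much more often. Names like /dev/sda are handed out in the order the kernel detects the drives, and that order is not guaranteed to be the same on every boot. The solution was to configure the hard drives using a unique identifier, either the UUID or the PARTUUID.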

Your drives might be configured in /etc/fstab or /etc/crypttab. If they are configured using the old Linux drive letters, they may look like this in /etc/crypttab:

    drive1_crypt /dev/sda1 /etc/keys/drive1.iso plain...
    drive2_crypt /dev/sdb1 /etc/keys/drive2.iso plain...
    drive3_crypt /dev/sdc1 /etc/keys/drive3.iso plain...
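Before editing, it can help to list which entries still use kernel names. Here is a minimal Python sketch. It assumes the usual whitespace-separated layout of /etc/crypttab and /etc/fstab, with `#` comments, and it only looks for /dev/sdX and /dev/hdX style names. The function name `unstable_entries` is hypothetical.

```python
import re

# Kernel-assigned names that can change between boots, e.g. /dev/sda1, /dev/hdb.
KERNEL_NAME_RE = re.compile(r"^/dev/[sh]d[a-z]+\d*$")


def unstable_entries(table_text: str) -> list[tuple[int, str]]:
    """Return (line number, device) for entries that use a /dev/sdX-style name.

    Works for /etc/crypttab (device in column 2) and /etc/fstab
    (device in column 1) by checking the first two fields of each line.
    """
    found = []
    for lineno, line in enumerate(table_text.splitlines(), start=1):
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):
            continue  # skip blank lines and comments
        for field in stripped.split()[:2]:
            if KERNEL_NAME_RE.match(field):
                found.append((lineno, field))
    return found


if __name__ == "__main__":
    # On a real system, pass the contents of /etc/crypttab or /etc/fstab instead.
    sample = """\
drive1_crypt /dev/sda1 /etc/keys/drive1.iso plain
drive2_crypt /dev/sdb1 /etc/keys/drive2.iso plain
"""
    for lineno, device in unstable_entries(sample):
        print(f"line {lineno}: {device}")
```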

Change the entries to use UUID, or PARTUUID, rather than the drive letters. You can get the unique identifier using the command blkid like this:

    blkid /dev/sda1
    /dev/sda1: PARTLABEL="drive1" PARTUUID="c7229a98-4814-4909-9a0e-7db88fbcf0b5"
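With several drives, copying identifiers by hand is error-prone. `blkid` can print a single tag directly, for example `blkid -s PARTUUID -o value /dev/sda1`. As an alternative, here is a small Python sketch that parses the default `blkid` output format shown above into a dictionary, so you can feed it the output of `blkid` run as root. The function name is hypothetical, and the sketch assumes values are double-quoted and contain no embedded quotes.

```python
import re

# KEY="value" pairs as printed by blkid's default output format.
TAG_RE = re.compile(r'(\w+)="([^"]*)"')


def parse_blkid(output: str) -> dict[str, dict[str, str]]:
    """Map each device in default blkid output to its KEY="value" tags."""
    result = {}
    for line in output.splitlines():
        device, sep, rest = line.partition(":")
        if not sep:
            continue  # not a "device: tags" line
        result[device.strip()] = dict(TAG_RE.findall(rest))
    return result


if __name__ == "__main__":
    out = '/dev/sda1: PARTLABEL="drive1" PARTUUID="c7229a98-4814-4909-9a0e-7db88fbcf0b5"'
    print(parse_blkid(out)["/dev/sda1"]["PARTUUID"])
```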

You can then revise your /etc/fstab or /etc/crypttab along these lines:

    drive1_crypt PARTUUID=c7229a98-4814-4909-9a0e-7db88fbcf0b5 /etc/keys/drive1.iso plain...
    drive2_crypt PARTUUID=b7270733-b19a-4f6e-8c58-80e21fed8a69 /etc/keys/drive2.iso plain...
    drive3_crypt PARTUUID=e48bf646-1397-41ac-8521-9c3b2f9104a8 /etc/keys/drive3.iso plain...
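The same edit can be scripted. Here is a minimal sketch that assumes the crypttab layout shown here (target name, then device, then key file and options) and a mapping from old device names to PARTUUIDs that you have already gathered with `blkid`. `rewrite_crypttab` is a hypothetical helper. It returns the new text rather than writing the file, so you can review the result before replacing anything.

```python
import re

# Captures: the target-name column plus its trailing space, the device, the rest.
LINE_RE = re.compile(r"^(\s*\S+\s+)(\S+)(.*)$")


def rewrite_crypttab(text: str, partuuids: dict[str, str]) -> str:
    """Replace known devices in column 2 with PARTUUID=..., keeping spacing."""
    out = []
    for line in text.splitlines():
        m = LINE_RE.match(line)
        is_comment = line.lstrip().startswith("#")
        if m and not is_comment and m.group(2) in partuuids:
            line = f"{m.group(1)}PARTUUID={partuuids[m.group(2)]}{m.group(3)}"
        out.append(line)
    return "\n".join(out) + "\n"


if __name__ == "__main__":
    old = "drive1_crypt /dev/sda1 /etc/keys/drive1.iso plain\n"
    mapping = {"/dev/sda1": "c7229a98-4814-4909-9a0e-7db88fbcf0b5"}
    print(rewrite_crypttab(old, mapping), end="")
```

Matching on the exact device string, instead of doing a global search and replace, avoids accidentally rewriting /dev/sda1 where it appears inside a comment or a key-file path.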

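The unique identifiers are not as nice for humans to manage, but they are better because they stay the same from one boot to the next, so a drive cannot go missing just because it was detected in a different order. And if the old drive letters are going to change on every boot, the identifiers are the only logical choice.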
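To see why kernel names fail intermittently rather than every time, here is a toy Python simulation. As an exaggeration, it assumes that the three partitions are detected in a completely random order on every boot and that names are handed out as /dev/sda1, /dev/sdb1, /dev/sdc1 in detection order. Real detection order is usually stable and only occasionally changes. The PARTUUIDs come from the crypttab example; all function names are hypothetical.

```python
import random

# Hypothetical model: the partition each configured name is supposed to reach.
EXPECTED = {
    "drive1": "c7229a98-4814-4909-9a0e-7db88fbcf0b5",
    "drive2": "b7270733-b19a-4f6e-8c58-80e21fed8a69",
    "drive3": "e48bf646-1397-41ac-8521-9c3b2f9104a8",
}
# The old-style configuration: fixed kernel names.
BY_LETTER = {"drive1": "/dev/sda1", "drive2": "/dev/sdb1", "drive3": "/dev/sdc1"}


def assign_kernel_names(detection_order):
    """Simplified: give out /dev/sdX1 names in the order partitions were found."""
    return {f"/dev/sd{chr(ord('a') + i)}1": partuuid
            for i, partuuid in enumerate(detection_order)}


def letter_config_correct(detection_order):
    """True only if every /dev/sdX1 entry reaches the intended partition."""
    names = assign_kernel_names(detection_order)
    return all(names[dev] == EXPECTED[drive] for drive, dev in BY_LETTER.items())


def partuuid_config_correct(detection_order):
    """A PARTUUID entry finds its partition whatever the detection order."""
    present = set(detection_order)
    return all(partuuid in present for partuuid in EXPECTED.values())


def simulate(boots, seed=0):
    rng = random.Random(seed)
    letter_ok = uuid_ok = 0
    for _ in range(boots):
        order = list(EXPECTED.values())
        rng.shuffle(order)  # detection order varies from boot to boot
        letter_ok += letter_config_correct(order)
        uuid_ok += partuuid_config_correct(order)
    return letter_ok, uuid_ok


if __name__ == "__main__":
    letter_ok, uuid_ok = simulate(1000)
    print(f"drive letters correct on {letter_ok}/1000 boots")
    print(f"PARTUUIDs correct on {uuid_ok}/1000 boots")
```

With a uniformly random order, the letter-based configuration is right on only about one boot in six, and it fails on a different drive each time. The PARTUUID configuration is right on every boot.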

When I searched online for an answer to this problem, I found this nice article by Grégory Soutadé that helped me out of the mess:

Longer Story

I have been having fun with my Linux computers for many years, and have had plenty of practice with naming conventions like /dev/hda or /dev/sda. Those simple names became how I thought of the drives. I have had this computer working reliably for many years using those shorter names. It was only in early 2020, after some system upgrades, that the drive letters started changing between reboots.

The problem showed up as hard drives intermittently going missing, because sometimes the drive letters that the system assigned matched the configuration files, and sometimes they did not. It was a scary problem at first, because it looked like a failing hard drive: one day, after many years, a hard drive simply does not show up. It became more of a mystery when first one drive seemed to be failing, then a different one, then back to the first one.

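The problem was masked even further because I set up the same kind of encryption on each drive. My crypttab entries use plain dm-crypt mode, which keeps no header on the disk, so there is nothing to check a key against. Opening a drive with another drive's key file still appeared to succeed and simply produced unreadable data. The failure only surfaced at the next step, when the system could not find the expected volume inside a drive that had been opened with the wrong key.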
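To illustrate why a wrong key went unnoticed, here is a toy Python model. It is not dm-crypt. The "cipher" is a SHA-256 keystream XORed with the data, chosen only because it shares the relevant property with plain mode: opening with any key returns data and never reports an error. The check for the next layer is a hypothetical stand-in. It looks for the LVM2 label signature `LABELONE`, assumed here to sit at the start of the second 512-byte sector, LVM2's default location.

```python
import hashlib


def keystream(key: bytes, length: int) -> bytes:
    """Toy keystream: SHA-256 of key + counter, concatenated (NOT dm-crypt)."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "little")).digest()
        counter += 1
    return bytes(out[:length])


def plain_open(data: bytes, key: bytes) -> bytes:
    """'Open' a headerless volume: never fails, whatever key is supplied.

    XOR is its own inverse, so the same call also 'encrypts'.
    """
    ks = keystream(key, len(data))
    return bytes(d ^ k for d, k in zip(data, ks))


def looks_like_lvm(data: bytes) -> bool:
    """Hypothetical next-layer check: LVM2 label magic at the start of sector 1."""
    return data[512:520] == b"LABELONE"


if __name__ == "__main__":
    disk = bytes(512) + b"LABELONE" + bytes(504)  # toy 1 KiB "partition"
    locked = plain_open(disk, b"drive1-key")
    print("right key finds LVM:", looks_like_lvm(plain_open(locked, b"drive1-key")))
    print("wrong key finds LVM:", looks_like_lvm(plain_open(locked, b"drive2-key")))
```

Both calls to `plain_open` return data of the right size without complaint. Only the content check tells the right key from the wrong one, which matches the symptom of a drive that "unlocks" but whose volume is never found.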

I heard many years ago that drive letters like /dev/sda were no longer going to be reliable, and that I should move to using UUID. But I never did because, well, the machine kept working with the old configuration and I liked the way it looked better. Today, when the computer didn’t boot after many tries, I finally got the message and switched to using UUID. :-)

kasploosh.com